About Everlyn AI

Everlyn AI describes itself as the world’s first decentralized infrastructure layer for autonomous video generation, combining fast generation with on-chain transparency. Built to democratize access to advanced video AI tools, Everlyn lets users turn text, image, or audio prompts into high-quality videos within seconds. As an open platform, it aims to remove the bottlenecks of closed proprietary systems, offering video AI that is fast, expressive, and accessible to everyone.

Everlyn AI reports generation speeds up to 15x faster than industry standards and positions itself as a leader at the intersection of AI and Web3. Users can connect wallets, generate content with agents such as Text-to-Video and Image-to-Video, and participate in a decentralized ecosystem where performance, privacy, and ownership go hand in hand.

Everlyn AI is pursuing an ambitious vision for decentralized AI video infrastructure. Rather than operating as a centralized service, Everlyn is built as an open-source protocol designed to accelerate the creation and sharing of AI-generated video content. At the core of the platform is an autoregressive foundational video model that interprets multimodal prompts (text, images, or audio) and converts them into cinematic visuals in real time.

Unlike traditional closed video AI systems, Everlyn AI brings together a community of researchers, developers, and users to build a composable, scalable ecosystem. Contributions come from top AI experts, including PhD researchers and professors from globally recognized institutions. Key innovations include ACFoley for lifelike audio, WanFM for frame interpolation, PhysHPO and AlignVid for motion and realism, and a custom video model training pipeline for fine-tuned control.

From 2025 onward, Everlyn plans to progress through a multi-phase roadmap: integrating generative gaming, immersive interactions, and a decentralized video intelligence layer through the Lyn Protocol. The protocol will store latent vectors and seeds on-chain, introducing verifiable video ownership and enabling video agents to operate independently within blockchain networks. If delivered, this would make Everlyn a bridge between generative AI and Web3, a position few platforms currently occupy.

In a space shared with competitors such as Runway ML, Pika, and Synthesia, Everlyn sets itself apart by offering an open, decentralized alternative with real-time generation, composability, and on-chain provenance. The aim is not only to create video but to build a foundation where video AI is autonomous, verifiable, and universally accessible.

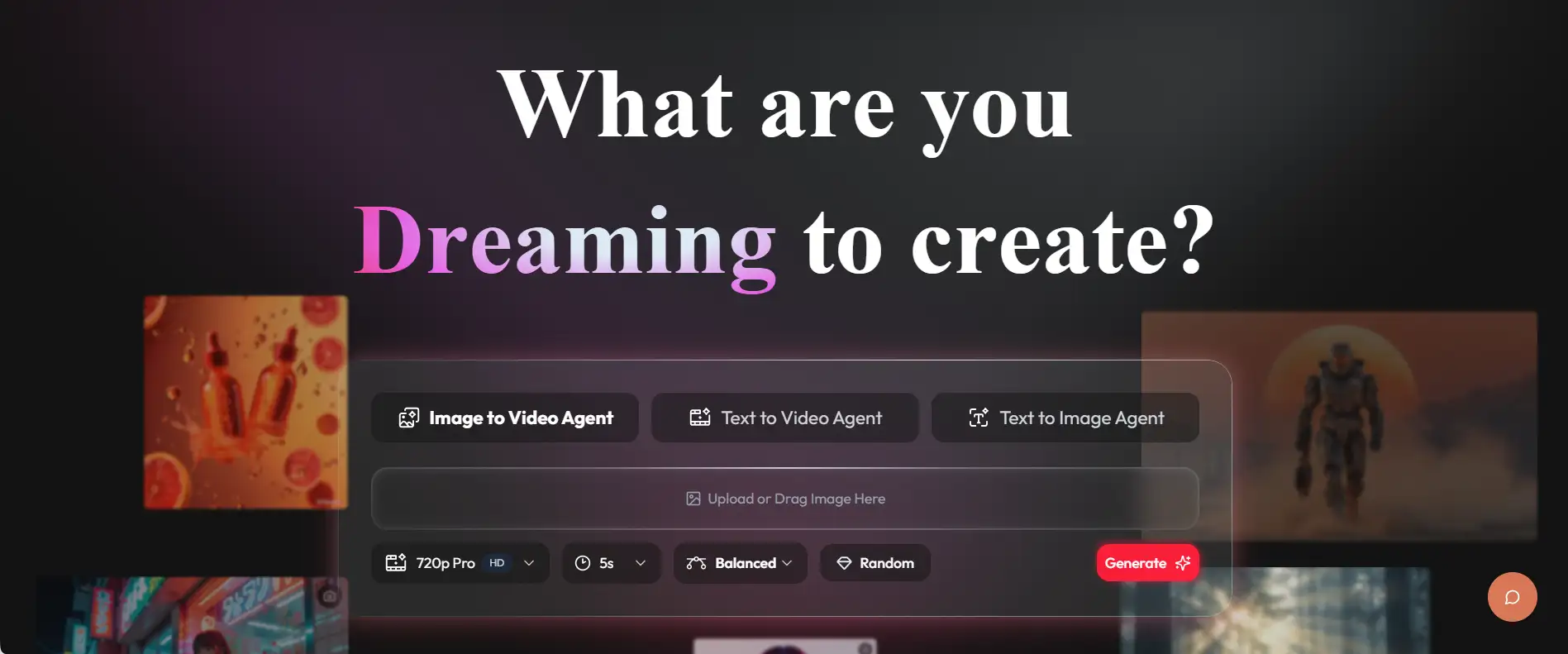

Everlyn AI offers the following features and capabilities for generative video:

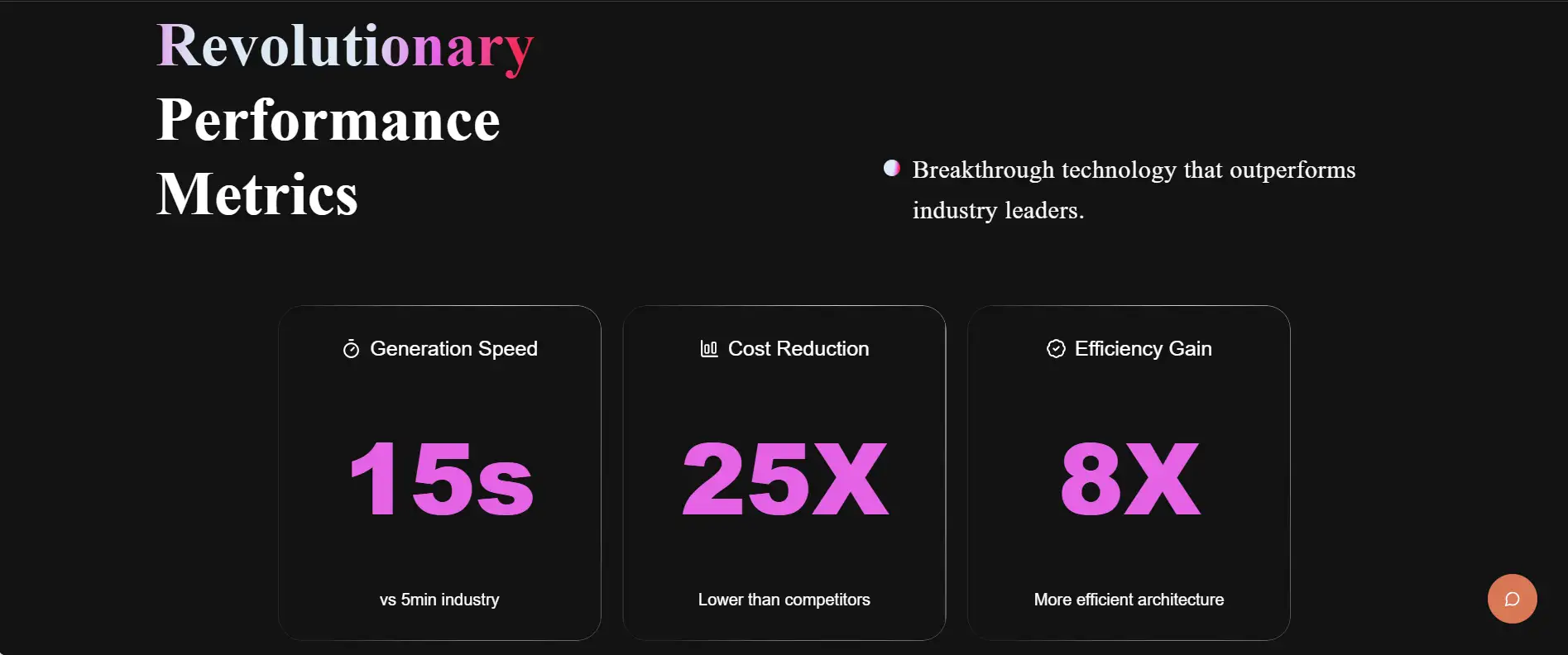

- Fastest Video Generation: Videos are generated in as little as 15 seconds, compared to several minutes on other platforms.

- Text-to-Video & Image-to-Video Agents: Multiple generative agents allow users to input creative prompts and turn them into dynamic visual content.

- Open Infrastructure: Unlike proprietary platforms, Everlyn AI is open-source and decentralized, allowing developers to build and extend its capabilities.

- On-Chain Video Intelligence: Through the Lyn Protocol, latent vectors and seeds are stored on-chain, enabling traceable, composable video assets.

- Efficient Architecture: By Everlyn’s figures, its int4 quantization and model optimizations deliver up to 8x greater efficiency and a 25x cost reduction compared with competitors.

- Real-Time Agents: Everlyn is building adaptive video agents that respond to real-time user interaction, motion, and sound.

- Developer Ecosystem: The platform supports APIs for fine-tuning, integrating, and simulating intelligent video environments.

Everlyn AI is designed to make powerful generative video accessible to everyone—from artists and developers to researchers and brands. Here’s how to get started:

- Step 1 – Visit the Platform: Go to everlyn.ai to explore the homepage and available agents.

- Step 2 – Connect Your Wallet: Click “Connect Wallet” in the top menu to sign in securely and prepare your workspace.

- Step 3 – Choose an Agent: Select a generative agent such as Text-to-Video or Image-to-Video, then type a prompt or upload an image, depending on the agent.

- Step 4 – Configure Settings: Adjust resolution (e.g. 720p), style (Balanced, Realistic, etc.), and video duration before generating.

- Step 5 – Generate Your Video: Click “Generate” to create your video. Output is typically ready in 10–15 seconds.

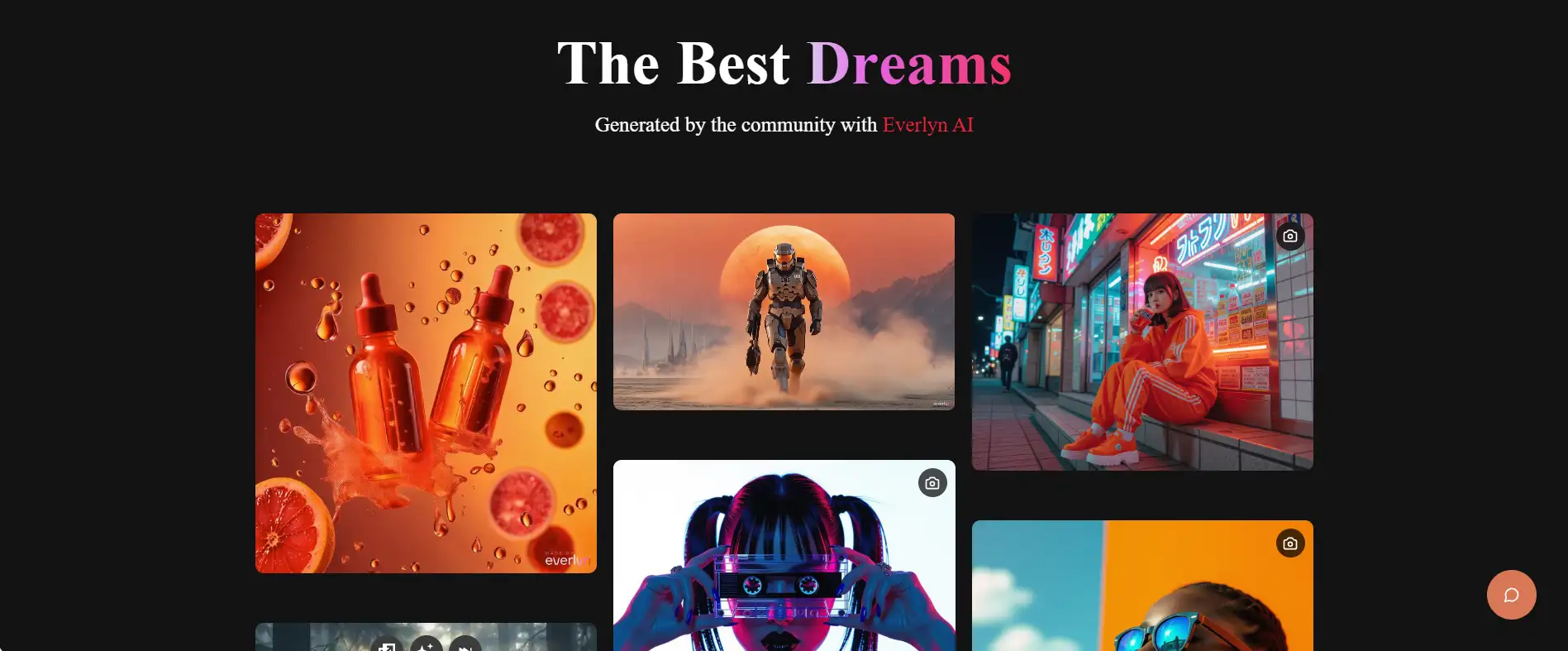

- Step 6 – Explore More: Check the Showcase to view community creations, or visit the Everlyn Docs for advanced API and developer tools.

Everlyn AI FAQ

Everlyn AI attributes its generation speed to its optimized int4 quantization, efficient autoregressive architecture, and custom training pipeline. Together, these reduce the computation required to generate high-quality frames. Rather than relying on a heavy, centralized compute stack, Everlyn AI distributes work across its decentralized infrastructure layer, allowing users to render videos in as little as 15 seconds, compared with the multi-minute runtimes of competing video models.
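The source does not describe how Everlyn's int4 quantization works. As a generic illustration of the technique, here is a minimal sketch of symmetric per-tensor int4 quantization with two values packed per byte. The function names and the per-tensor scaling choice are assumptions made for this example, not Everlyn's pipeline. For reference, a 4-bit weight takes one-eighth the storage of a 32-bit float.

```python
"""Generic symmetric int4 weight quantization (illustrative sketch, not Everlyn's pipeline)."""


def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to signed 4-bit integers in [-8, 7] using one per-tensor scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Choose the scale so the largest magnitude maps to 7, the int4 positive limit.
    scale = max_abs / 7.0 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int4(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the quantized integers."""
    return [v * scale for v in q]


def pack_int4(q: list[int]) -> bytes:
    """Pack two 4-bit values per byte (low nibble first), padding odd lengths with 0."""
    if len(q) % 2:
        q = q + [0]
    return bytes((lo & 0x0F) | ((hi & 0x0F) << 4) for lo, hi in zip(q[0::2], q[1::2]))


def unpack_int4(packed: bytes, n: int) -> list[int]:
    """Inverse of pack_int4: split bytes into nibbles and sign-extend from 4 bits."""
    values = []
    for byte in packed:
        for nibble in (byte & 0x0F, byte >> 4):
            values.append(nibble - 16 if nibble >= 8 else nibble)
    return values[:n]


if __name__ == "__main__":
    weights = [0.7, -0.35, 0.12, -0.7, 0.05]
    q, scale = quantize_int4(weights)
    packed = pack_int4(q)
    restored = dequantize_int4(unpack_int4(packed, len(q)), scale)
    print(q, len(packed), "bytes")
    print([round(r, 3) for r in restored])
```

Because the scale maps the largest weight exactly to 7, no value is clipped and each restored weight is within half a quantization step of the original. Production systems usually refine this with per-channel or per-group scales to reduce error on tensors with outliers.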

Everlyn AI presents itself as the first decentralized infrastructure layer for autonomous video AI, offering on-chain provenance and open-source access. Whereas centralized platforms like Runway and Pika restrict model access and keep generated assets in closed ecosystems, Everlyn AI supports composability, developer integration, and transparent model evolution. With features such as real-time avatars, lifelike audio, and a Web3-centric design, Everlyn aims to give creators more control, ownership, and freedom to build on top of its foundational video models.

The upcoming Lyn Protocol is designed to change video ownership by storing latent vectors and generation seeds on-chain, making each AI-generated asset verifiable, traceable, and composable. Instead of relying on centralized servers, creators using Everlyn AI will gain permanent, transparent provenance for their videos. This enables on-chain use cases such as verifiable creative history, collaborative asset generation, and autonomous agents that interact with blockchain environments through video data.
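The Lyn Protocol's on-chain format is not described here. To make "verifiable" concrete, here is a minimal sketch that assumes a generation is identified by a SHA-256 digest over a deterministic encoding of its model version, seed, and latent vector. Anyone holding the claimed seed and latent can recompute the digest and compare it with the published value. `GenerationRecord`, `serialize`, `digest`, `verify`, and the field names are all hypothetical. Whether Lyn stores raw latents or a commitment like this is not stated, and writing to an actual blockchain is out of scope.

```python
"""Hypothetical provenance commitment for a generated video (not the Lyn Protocol format)."""
import hashlib
import json
import struct
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationRecord:
    # Illustrative fields only; the real on-chain schema is not described in the source.
    model_version: str
    seed: int
    latent: tuple[float, ...]

    def serialize(self) -> bytes:
        """Deterministic bytes: length-prefixed sorted-key JSON metadata, then float32 latents."""
        meta = json.dumps(
            {"model_version": self.model_version, "seed": self.seed}, sort_keys=True
        ).encode("utf-8")
        latent_bytes = struct.pack(f"<{len(self.latent)}f", *self.latent)
        # The length prefix keeps the metadata/latent boundary unambiguous.
        return struct.pack("<I", len(meta)) + meta + latent_bytes

    def digest(self) -> str:
        """SHA-256 commitment that could be published in place of, or alongside, the raw data."""
        return hashlib.sha256(self.serialize()).hexdigest()


def verify(record: GenerationRecord, published_digest: str) -> bool:
    """Recompute the commitment from the claimed seed and latent and compare."""
    return record.digest() == published_digest


if __name__ == "__main__":
    original = GenerationRecord("v1", 42, (0.25, -1.5, 3.0))  # "v1" is a placeholder label
    published = original.digest()
    tampered = GenerationRecord("v1", 43, (0.25, -1.5, 3.0))
    print(verify(original, published), verify(tampered, published))
```

Encoding the latent as little-endian float32 keeps the byte layout fixed across machines. One consequence is that two latents differing only beyond float32 precision would produce the same digest.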

Everlyn AI is committed to open-source principles, allowing developers to extend, fine-tune, or integrate its foundational video model into custom applications. With a growing developer ecosystem and tooling documented in the Everlyn Docs, teams can modify model pipelines, build creative agents, or research new video AI capabilities. This openness makes Everlyn a strong fit for teams exploring generative gaming, intelligent worlds, or simulation-based creativity.

Everlyn AI provides a suite of generative agents tailored for different workflows, including Text-to-Video, Image-to-Video, and Text-to-Image creation. These agents let users start from written prompts, uploaded images, or conceptual ideas and transform them into dynamic visuals within seconds. Each agent is powered by Everlyn’s high-performance video model, ensuring fast, expressive results. Users can access all agents through the main dashboard at everlyn.ai and configure resolution, duration, and style to match their creative goals.
